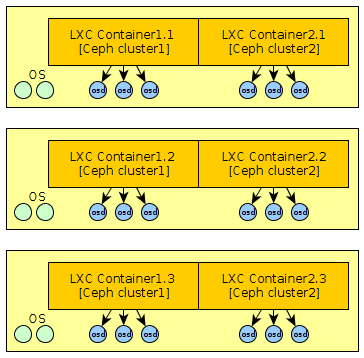

Ceph makes it easy to run multiple clusters on the same hardware by giving each cluster its own name. If you want better isolation, you can use LXC, for example to run a different version of Ceph in each cluster.
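To make the naming part concrete, here is a minimal sketch of how a cluster name maps to a configuration file and to the `--cluster` option of the `ceph` command. It assumes the default `/etc/ceph/<name>.conf` layout. The cluster name `test` and both helper functions are made up for illustration, and the code only builds paths and command lines without running anything.

```python
from pathlib import PurePosixPath

# Assumption: configuration files live in the default /etc/ceph directory.
CEPH_CONF_DIR = PurePosixPath("/etc/ceph")


def cluster_conf_path(cluster: str) -> PurePosixPath:
    """Return the config file a cluster with this name would read by default."""
    return CEPH_CONF_DIR / f"{cluster}.conf"


def ceph_command(cluster: str, *args: str) -> list[str]:
    """Build a ceph CLI invocation that targets the named cluster."""
    return ["ceph", "--cluster", cluster, *args]


if __name__ == "__main__":
    # "ceph" is the default cluster name; "test" is a hypothetical second one.
    for name in ("ceph", "test"):
        print(cluster_conf_path(name), "|", " ".join(ceph_command(name, "status")))
```

Clusters separated only by name still share the Ceph packages installed on the host, which is the limitation that LXC containers remove.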

For this you will need access to the physical disks from …