A 3.x kernel for OpenVZ is out, and it is compiled with the rbd module:

```
root@debian:~# uname -a
Linux debian 3.10.0-3-pve #1 SMP Thu Jun 12 13:50:49 CEST 2014 x86_64 GNU/Linux

root@debian:~# modinfo rbd
filename: /lib/modules/3.10.0-3-pve …
```
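The excerpt stops at the `modinfo` output. As a minimal sketch of what the module is then used for, assuming the OpenVZ host node already has a working `/etc/ceph/ceph.conf` and keyring, the hypothetical helper `map_rbd` loads the module and maps an image to a `/dev/rbdN` block device. It only prints the commands unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Print each command (default) or run it when DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

# map_rbd <pool> <image>
# Loads the rbd kernel module, maps <pool>/<image> to a /dev/rbdN device
# on the host node, then lists the current mappings.
map_rbd() {
  run modprobe rbd
  run rbd map "$1/$2"
  run rbd showmapped
}
```

For example, `DRY_RUN=0 map_rbd rbd ct101` would map a (hypothetical) image `ct101` from the `rbd` pool.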

You have probably already had to migrate all objects from one pool to another, especially to change parameters that cannot be modified on an existing pool: for example, to migrate from a replicated pool to an EC pool, to change the EC profile, or to reduce the number of PGs... There are …
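The excerpt is cut off before the methods themselves. As one hedged sketch (not necessarily the approach the post goes on to describe), the simplest path is to create the destination pool, copy the objects with `rados cppool`, then swap the pool names. Pool names and the PG count are placeholders, and `migrate_pool` is a hypothetical helper. Client I/O on the source pool has to be stopped during the copy, since objects written after `cppool` has passed them would not reach the new pool. `cppool` is also not guaranteed to work for every case listed above (an erasure-coded destination, for instance, may be refused depending on the release), so test it first.

```shell
#!/bin/sh
# Print each command (default) or run it when DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

# migrate_pool <src> <dst> <pg_num> [extra "ceph osd pool create" args]
# Creates <dst>, copies every object of <src> into it, then swaps the names
# so clients keep using the original pool name. Stop client I/O first.
migrate_pool() {
  src=$1 dst=$2 pgs=$3
  shift 3
  run ceph osd pool create "$dst" "$pgs" "$pgs" "$@"
  run rados cppool "$src" "$dst"
  run ceph osd pool rename "$src" "$src.old"    # keep the old pool until verified
  run ceph osd pool rename "$dst" "$src"
}
```

For example, `migrate_pool data data-new 64` recreates a pool named `data` with 64 PGs; the previous pool stays available as `data.old` until you delete it.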

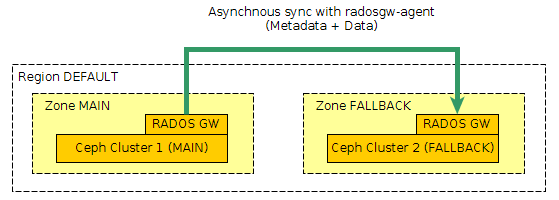

This is a simple example of a federated gateway configuration that sets up asynchronous replication between two Ceph clusters.

(Update Nov. 2016: since the Jewel release, radosgw-agent is no longer needed, and active-active replication between zones is now supported. See http://docs.ceph.com/docs/jewel/radosgw/multisite/)

(The configuration below …
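The radosgw-agent configuration itself is cut off in this excerpt. Following the update note, here is a hedged dry-run sketch of the Jewel-style multisite setup instead, modelled on the steps in the multisite documentation linked above (check it for your release). The realm, zonegroup and zone names, endpoints and keys are placeholders, and `setup_master` / `setup_secondary` are hypothetical helpers. Commands are printed unless `DRY_RUN=0`.

```shell
#!/bin/sh
# Print each command (default) or run it when DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

# First cluster: realm, master zonegroup, master zone and a system user.
# setup_master <realm> <zonegroup> <zone> <endpoint> <access_key> <secret>
setup_master() {
  realm=$1 zg=$2 zone=$3 url=$4 ak=$5 sk=$6
  run radosgw-admin realm create --rgw-realm="$realm" --default
  run radosgw-admin zonegroup create --rgw-zonegroup="$zg" --endpoints="$url" \
      --rgw-realm="$realm" --master --default
  run radosgw-admin zone create --rgw-zonegroup="$zg" --rgw-zone="$zone" \
      --endpoints="$url" --master --default
  # System user whose keys the other zone uses to authenticate for sync.
  run radosgw-admin user create --uid=sync-user --display-name=sync-user \
      --access-key="$ak" --secret="$sk" --system
  run radosgw-admin zone modify --rgw-zone="$zone" --access-key="$ak" --secret="$sk"
  run radosgw-admin period update --commit
}

# Second cluster: pull the realm and period, then add a secondary zone.
# setup_secondary <realm> <master_endpoint> <zonegroup> <zone> <endpoint> <access_key> <secret>
setup_secondary() {
  realm=$1 murl=$2 zg=$3 zone=$4 url=$5 ak=$6 sk=$7
  run radosgw-admin realm pull --url="$murl" --access-key="$ak" --secret="$sk"
  run radosgw-admin realm default --rgw-realm="$realm"
  run radosgw-admin period pull --url="$murl" --access-key="$ak" --secret="$sk"
  run radosgw-admin zone create --rgw-zonegroup="$zg" --rgw-zone="$zone" \
      --endpoints="$url" --access-key="$ak" --secret="$sk"
  run radosgw-admin period update --commit
}
```

For example, `setup_master gold zg1 zone-a http://rgw1:80 "$AK" "$SK"` on the first cluster, then `setup_secondary gold http://rgw1:80 zg1 zone-b http://rgw2:80 "$AK" "$SK"` on the second. Each gateway then needs its zone set in `ceph.conf` (`rgw_zone`) and a restart before replication starts.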

Get the PG distribution per OSD from the command line:
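The excerpt does not include the command that produced the table in this post. One way to build a similar per-pool, per-OSD count is sketched here as an awk function fed with `ceph pg dump pgs_brief`. It assumes a header row starting with `pg_stat` or `PG_STAT` and a column named `up` or `UP` holding sets like `[10,11,12]`; the exact layout may differ between releases, and `pg_per_osd` is a hypothetical name.

```shell
#!/bin/sh
# pg_per_osd: read `ceph pg dump pgs_brief` output on stdin and print, for each
# OSD, how many PGs of each pool have that OSD in their "up" set, plus a total.
pg_per_osd() {
  awk '
  # numeric insertion sort of a[1..n] (POSIX awk has no built-in sort)
  function isort(a, n,    i, j, t) {
    for (i = 2; i <= n; i++) {
      t = a[i]; j = i - 1
      while (j > 0 && a[j] + 0 > t + 0) { a[j + 1] = a[j]; j-- }
      a[j + 1] = t
    }
  }
  # header row (pg_stat or PG_STAT): remember which column holds the up set
  tolower($1) == "pg_stat" {
    for (i = 1; i <= NF; i++) if (tolower($i) == "up") upcol = i
    next
  }
  # PG rows start with <pool>.<hex seq>, e.g. 1.1f
  upcol && $1 ~ /^[0-9]+\.[0-9a-f]+$/ {
    split($1, id, "."); pool = id[1]
    if (!(pool in seenpool)) { seenpool[pool] = 1; pools[++np] = pool }
    set = $upcol; gsub(/\[|\]/, "", set)      # "[10,11]" -> "10,11"
    n = split(set, osds, ",")
    for (k = 1; k <= n; k++) {
      o = osds[k]
      if (!(o in seenosd)) { seenosd[o] = 1; osdlist[++no] = o }
      count[o, pool]++
    }
  }
  END {
    isort(pools, np); isort(osdlist, no)
    line = "pool :"
    for (p = 1; p <= np; p++) line = line "\t" pools[p]
    print line "\t| SUM"
    for (r = 1; r <= no; r++) {
      line = "osd." osdlist[r]; sum = 0
      for (p = 1; p <= np; p++) {
        c = count[osdlist[r], pools[p]] + 0
        sum += c; line = line "\t" c
      }
      print line "\t| " sum
    }
  }'
}
```

Usage: `ceph pg dump pgs_brief 2>/dev/null | pg_per_osd`. This counts every OSD in the up set, not only primaries; counting acting sets or primaries instead would mean reading a different column.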

```
pool :  0  1  2  3    | SUM
------------------------------------------------
osd.10  6  6  6  84   | 102
osd.11  7  6  6  76   | 95
osd.12  4  4  3  56   | 67
osd.20  5  5  5  107  | 122
osd.13  3  3  3  73   | 82
…
```

It is not always easy to know how to organize your data in the Crushmap, especially when trying to distribute data geographically while also separating different types of disks, e.g. SATA, SAS and SSD. Let's see what kind of Crushmap hierarchy we can design.

Take a simple example of a distribution …
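The excerpt stops before the example hierarchy. As a hedged sketch of one common way to combine geography and disk type (not necessarily the layout the post arrives at), the helpers below create one root per disk type, datacenter and host buckets suffixed with that type, and a rule that spreads replicas across datacenters. The `-<type>` naming convention and the names `build_tree` and `place_osd` are hypothetical; commands are printed unless `DRY_RUN=0`.

```shell
#!/bin/sh
# Print each command (default) or run it when DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

# build_tree <disk_type> <dc>:<host> [<dc>:<host> ...]
# Creates root <disk_type>, one datacenter bucket per dc and one host bucket
# per host, all suffixed with -<disk_type> so that a physical host holding
# several disk types can appear once under each root. Then creates a rule
# that starts at that root and puts each replica in a different datacenter.
build_tree() {
  t=$1
  shift
  seen=""
  run ceph osd crush add-bucket "$t" root
  for pair in "$@"; do
    dc=${pair%%:*}
    host=${pair#*:}
    case " $seen " in
      *" $dc "*) ;;                           # datacenter bucket already created
      *)
        run ceph osd crush add-bucket "$dc-$t" datacenter
        run ceph osd crush move "$dc-$t" "root=$t"
        seen="$seen $dc"
        ;;
    esac
    run ceph osd crush add-bucket "$host-$t" host
    run ceph osd crush move "$host-$t" "datacenter=$dc-$t"
  done
  run ceph osd crush rule create-simple "$t-rule" "$t" datacenter
}

# place_osd <id> <weight> <disk_type> <dc> <host>
place_osd() {
  run ceph osd crush set "osd.$1" "$2" "root=$3" "datacenter=$4-$3" "host=$5-$3"
}
```

For example, `build_tree ssd dc1:node1 dc2:node2` and `build_tree sata dc1:node1 dc2:node2`, then `place_osd 4 1.0 ssd dc1 node1` for each OSD. A pool is pointed at a rule with `ceph osd pool set <pool> crush_ruleset <id>` (`crush_rule <name>` on Luminous and later). Because the failure domain is the datacenter, this rule places at most one replica per datacenter. OSDs may also move themselves back under their default host at start-up unless `osd crush update on start` is disabled; on Luminous and later, device classes are an alternative to the per-type duplicate buckets.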