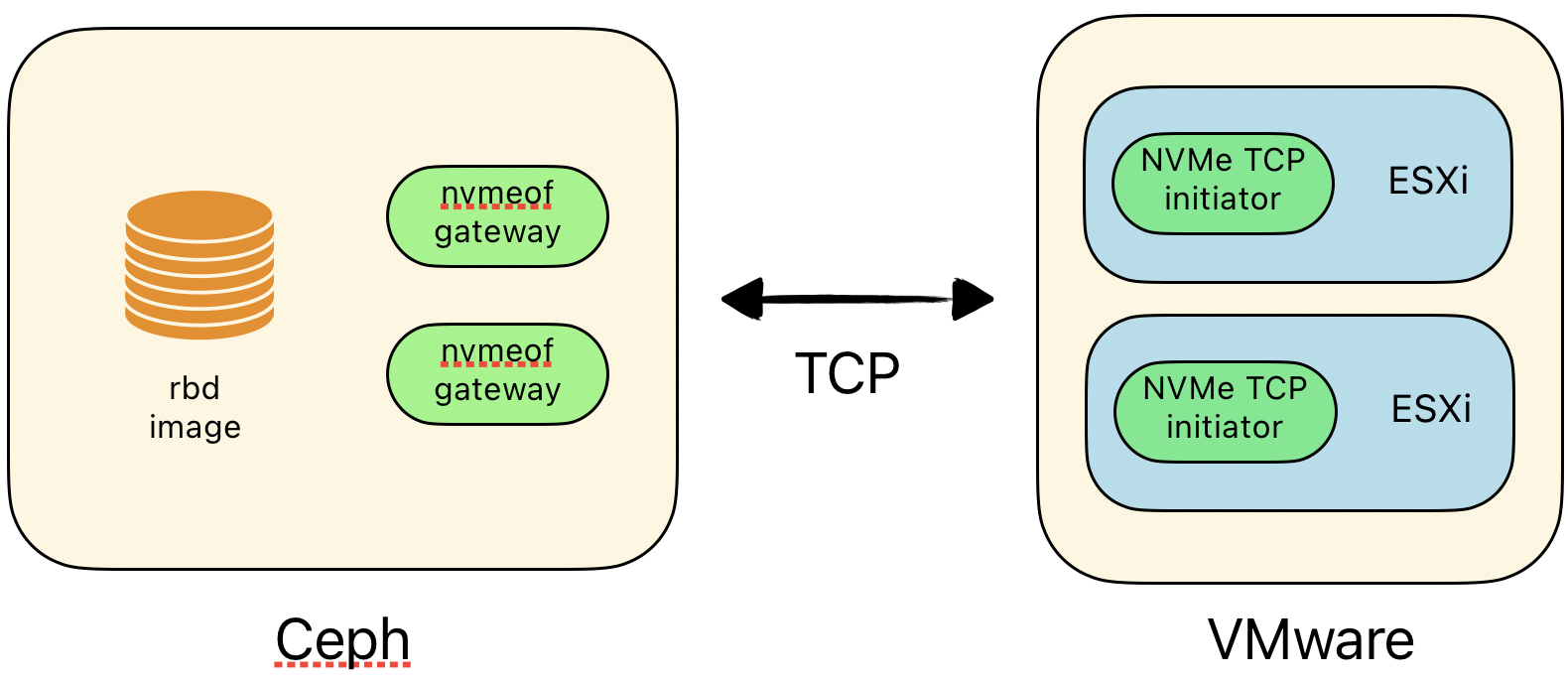

A quick test of NVMe over Fabrics (NVMe/TCP) between Ceph and VMware ESXi...

Create an RBD volume

# Create a pool "nvmeof_pool01" and an RBD image inside it:

ceph osd pool create nvmeof_pool01

rbd pool init nvmeof_pool01

rbd -p nvmeof_pool01 create nvme_image --size 50G

# Deploy an nvmeof gateway instance (version 1.0.0)

ceph config set mgr mgr/cephadm/container_image_nvmeof quay.io/ceph/nvmeof:1.0.0

ceph orch apply nvmeof nvmeof_pool01 --placement="ceph01"
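
The pool, image, and gateway steps above can be parameterized in a small script. This is a minimal sketch, not the procedure used in this test: the variable names (POOL, IMAGE, SIZE, GW_HOST, NVMEOF_IMAGE) and the DRY_RUN switch are hypothetical additions. By default it only prints the commands it would run. Set DRY_RUN=0 on a node with the ceph and rbd CLIs to actually execute them.

```shell
# Hypothetical parameters; defaults match the values used in this post.
POOL="${POOL:-nvmeof_pool01}"
IMAGE="${IMAGE:-nvme_image}"
SIZE="${SIZE:-50G}"
GW_HOST="${GW_HOST:-ceph01}"
NVMEOF_IMAGE="${NVMEOF_IMAGE:-quay.io/ceph/nvmeof:1.0.0}"

# Print the command when DRY_RUN=1 (the default), otherwise execute it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Same five commands as above, in the same order.
setup_rbd_and_gateway() {
    run ceph osd pool create "$POOL"
    run rbd pool init "$POOL"
    run rbd -p "$POOL" create "$IMAGE" --size "$SIZE"
    run ceph config set mgr mgr/cephadm/container_image_nvmeof "$NVMEOF_IMAGE"
    run ceph orch apply nvmeof "$POOL" --placement="$GW_HOST"
}
```

Running `setup_rbd_and_gateway` first in dry-run mode lets you review the exact commands before anything touches the cluster.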

Configure the NVMe subsystem

# Create an alias to simplify the following commands

# I use the matching nvmeof-cli version (1.0.0); 192.168.10.11 is the host ceph01

# I run these commands directly on the Ceph host, but any host with podman installed would do

alias nvmeof-cli='podman run -it quay.io/ceph/nvmeof-cli:1.0.0 --server-address 192.168.10.11 --server-port 5500'
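
Bash does not expand aliases in non-interactive shells unless the expand_aliases option is set. If you want to script these calls, a shell function does the same job. The sketch below is a variant of the alias, not the one used in this test. It adds podman's `--rm` flag so each short-lived CLI container is removed after it exits. It also reads the image, address, and port from hypothetical variables (NVMEOF_CLI_IMAGE, GW_ADDR, GW_PORT). DRY_RUN=1 prints the podman command instead of running it.

```shell
NVMEOF_CLI_IMAGE="${NVMEOF_CLI_IMAGE:-quay.io/ceph/nvmeof-cli:1.0.0}"
GW_ADDR="${GW_ADDR:-192.168.10.11}"   # gateway host (ceph01 in this test)
GW_PORT="${GW_PORT:-5500}"            # gateway port used by the alias

# Function equivalent of the nvmeof-cli alias; extra arguments are passed
# through to the CLI (e.g. "subsystem list").
nvmeof_cli() {
    # --rm removes the container once the CLI command exits;
    # -it assumes an interactive terminal, as with the alias.
    set -- podman run --rm -it "$NVMEOF_CLI_IMAGE" \
        --server-address "$GW_ADDR" --server-port "$GW_PORT" "$@"
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}
```

Usage is the same as the alias, for example `nvmeof_cli subsystem list`.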

# Create the subsystem, the namespace backed by the RBD image, and the listener

nvmeof-cli subsystem add --subsystem nqn.2016-06.io.spdk:ceph

nvmeof-cli namespace add --subsystem nqn.2016-06.io.spdk:ceph --rbd-pool nvmeof_pool01 --rbd-image nvme_image

nvmeof-cli listener add --subsystem nqn.2016-06.io.spdk:ceph --gateway-name client.nvmeof.nvmeof_pool01.ceph01.htrgqe --traddr 192.168.10.11 --trsvcid 4420
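
The `--gateway-name` value is not chosen freely. In this test it is the cephadm daemon name of the gateway with a `client.` prefix. Its last component (htrgqe) is a random suffix that will differ on your cluster. The sketch below derives the name from `ceph orch ps` instead of copying it by hand. It assumes that this pattern holds and that `ceph orch ps` prints the daemon name in its first column and the host in its second. The function name is hypothetical.

```shell
# Read `ceph orch ps` output on stdin and print "client.<daemon name>" for
# every nvmeof daemon on the given host.
# Assumptions: NAME is column 1, HOST is column 2, nvmeof daemons are
# named "nvmeof.<...>", and the gateway name is "client." + daemon name.
nvmeof_gateway_names() {
    awk -v host="$1" '$1 ~ /^nvmeof\./ && $2 == host { print "client." $1 }'
}
```

For example, `GW_NAME="$(ceph orch ps | nvmeof_gateway_names ceph01)"` could then feed `--gateway-name "$GW_NAME"`. Check the result against your own `ceph orch ps` output before relying on it.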

# Allow all hosts (to allow only specific ESXi hosts, retrieve each host's NQN with "esxcli nvme info get")

nvmeof-cli host add --subsystem nqn.2016-06.io.spdk:ceph --host "*"
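
Allowing "*" is fine for a lab. To admit only specific ESXi hosts, pull each host's NQN from `esxcli nvme info get` and pass it to `nvmeof-cli host add --host` in place of "*". The sketch below assumes the esxcli output contains a line of the form `Host NQN: <nqn>`. The helper names are hypothetical, and the second function only prints the command rather than running it.

```shell
SUBSYS_NQN="nqn.2016-06.io.spdk:ceph"

# Extract the NQN from `esxcli nvme info get` output on stdin.
# Assumes a "Host NQN: <nqn>" line; adapt the pattern if yours differs.
host_nqn_from_esxcli() {
    sed -n 's/^[[:space:]]*Host NQN:[[:space:]]*//p'
}

# Print (not run) the nvmeof-cli command that allows a single host NQN.
allow_host_cmd() {
    printf 'nvmeof-cli host add --subsystem %s --host %s\n' "$SUBSYS_NQN" "$1"
}
```

The following usage assumes SSH is enabled on the ESXi host: `nqn="$(ssh root@esxi01 esxcli nvme info get | host_nqn_from_esxcli)"`, then `allow_host_cmd "$nqn"` prints the command to run.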

# List all subsystems

nvmeof-cli subsystem list

| Subtype | NQN | HA State | Serial Number | Model Number | Controller IDs | Namespace Count |
|---|---|---|---|---|---|---|
| NVMe | nqn.2016-06.io.spdk:ceph | disabled | SPDK4675192209286 | SPDK bdev Controller | 1-65519 | 1 |

# List namespaces

nvmeof-cli namespace list --subsystem nqn.2016-06.io.spdk:ceph

| NSID | Bdev Name | RBD Pool | RBD Image | Image Size | Block Size | UUID | Load Balancing Group | R/W IOs per second | R/W MBs per second | Read MBs per second | Write MBs per second |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | bdev_df4877b5-79b8-41e0-95f1-2c9aa222a513 | nvmeof_pool01 | nvme_image | 50 GiB | 512 B | df4877b5-79b8-41e0-95f1-2c9aa222a513 | <n/a> | unlimited | unlimited | unlimited | unlimited |

# List allowed hosts

nvmeof-cli host list --subsystem nqn.2016-06.io.spdk:ceph

| Host NQN |
|---|
| Any host |

Configure NVMe on ESXi

In this test I use a single network. For production, a dedicated, redundant network with multiple initiators would be better.

This is done on every ESXi host:

[root@esxi01:~] esxcli nvme fabrics enable --protocol TCP --device vmnic0

[root@esxi01:~] esxcli network ip interface tag add --interface-name vmk0 --tagname NVMeTCP
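
The same two commands are repeated on every host, so you can drive them from an admin workstation over SSH. The sketch below makes three assumptions: SSH is enabled on the ESXi hosts, every host uses vmnic0 and vmk0 like esxi01, and the host names are hypothetical. It prints the commands unless DRY_RUN=0.

```shell
# Hypothetical host list; unquoted below on purpose so it splits on spaces.
ESXI_HOSTS="${ESXI_HOSTS:-esxi01 esxi02 esxi03}"

# Print the command when DRY_RUN=1 (the default), otherwise execute it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then echo "+ $*"; else "$@"; fi
}

# Enable the NVMe/TCP adapter and tag the VMkernel interface on each host.
enable_nvme_tcp_on_all() {
    for h in $ESXI_HOSTS; do
        run ssh "root@$h" esxcli nvme fabrics enable --protocol TCP --device vmnic0
        run ssh "root@$h" esxcli network ip interface tag add --interface-name vmk0 --tagname NVMeTCP
    done
}
```

The software adapter created by the first command (vmhba65 on esxi01) is not guaranteed to have the same name on every host. Check it with `esxcli nvme adapter list` before running the discovery step.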

Test discovery:

[root@esxi01:~] esxcli nvme fabrics discover -a vmhba65 -i 192.168.10.11 -p 8009

| Transport Type | Address Family | Subsystem Type | Controller ID | Admin Queue Max Size | Transport Address | Transport Service ID | Subsystem NQN | Connected |
|---|---|---|---|---|---|---|---|---|
| TCP | IPv4 | NVM | 65535 | 128 | 192.168.10.11 | 4420 | nqn.2016-06.io.spdk:ceph | true |
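
When scripting this step, you can check the discovery result before connecting. In the raw esxcli output, every value in a data row is a single whitespace-separated token. Assuming that holds, the subsystem NQN is field 8 and the Connected flag is field 9. A minimal sketch (the function name is hypothetical):

```shell
# Read `esxcli nvme fabrics discover` output on stdin and print the
# Connected value for the given subsystem NQN.
# Prints nothing if the subsystem was not discovered.
discovered_state() {
    awk -v nqn="$1" '$8 == nqn { print $9 }'
}
```

For example: `esxcli nvme fabrics discover -a vmhba65 -i 192.168.10.11 -p 8009 | discovered_state nqn.2016-06.io.spdk:ceph`.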

Connect and list namespaces:

[root@esxi01:~] esxcli nvme fabrics connect -a vmhba65 -i 192.168.10.11 -p 4420 -s nqn.2016-06.io.spdk:ceph

[root@esxi01:~] esxcli nvme namespace list

Name Controller Number Namespace ID Block Size Capacity in MB

------------------------------------ ----------------- ------------ ---------- --------------

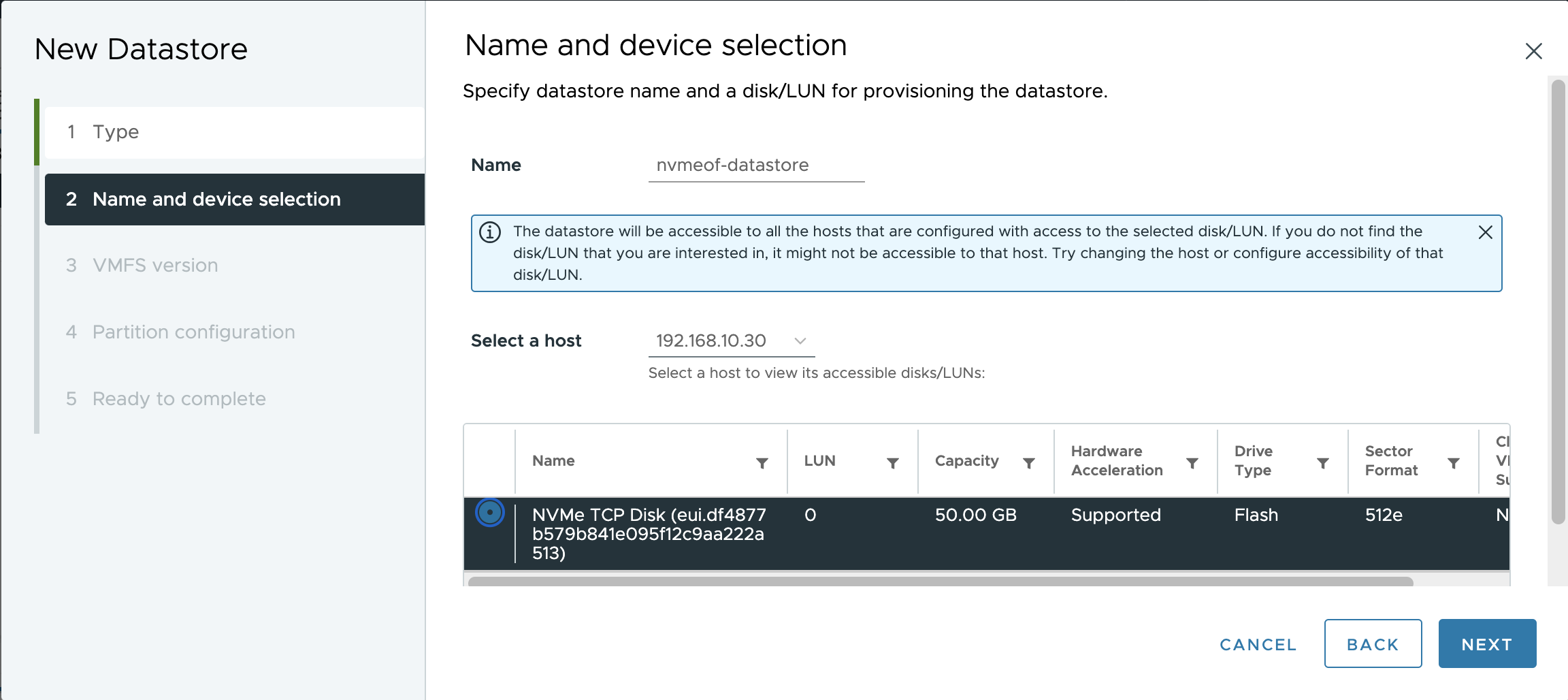

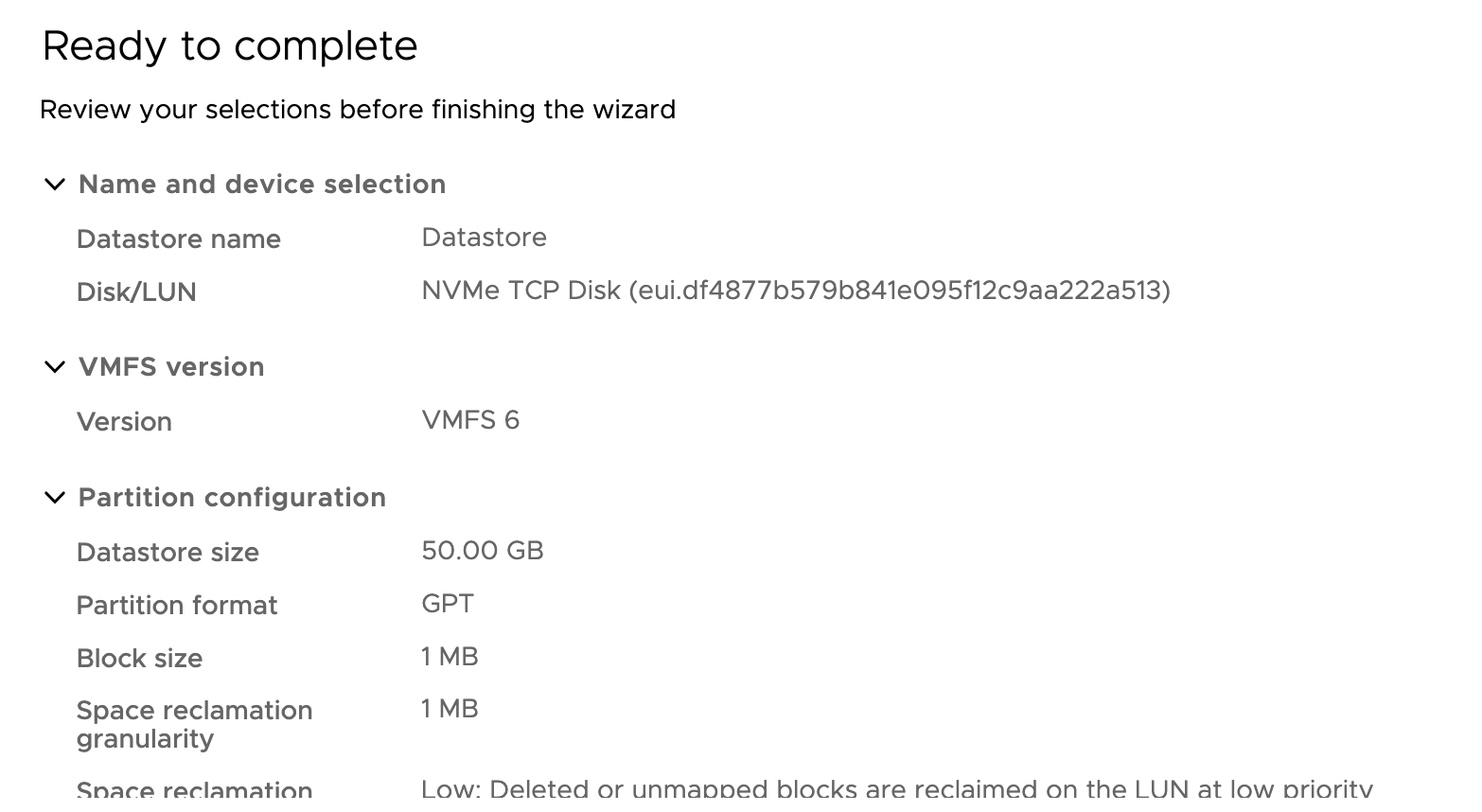

eui.df4877b579b841e095f12c9aa222a513 256 1 512 51200

Add the datastore from VSphere client :
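
In the datastore wizard, the device appears under its EUI name. Comparing the outputs in this post, that name is "eui." followed by the namespace UUID from `nvmeof-cli namespace list` with the dashes removed. The 50 GiB image shows up as 51200 MB (50 × 1024), so esxcli's "MB" are effectively MiB here. The two hypothetical helpers below make it easy to match an RBD image to its ESXi device. They assume this mapping holds in general, although it is inferred from a single example.

```shell
# ESXi device name for an NVMe-oF namespace UUID: "eui." + UUID without dashes.
# Inferred from a single example; treat it as an assumption.
uuid_to_eui() {
    printf 'eui.%s\n' "$(printf '%s\n' "$1" | sed 's/-//g')"
}

# Capacity that `esxcli nvme namespace list` should report for an image
# of N GiB, given that its "MB" column matched 1024 * N in this test.
gib_to_esxi_mb() {
    echo $(( $1 * 1024 ))
}
```

With the values above, `uuid_to_eui df4877b5-79b8-41e0-95f1-2c9aa222a513` prints `eui.df4877b579b841e095f12c9aa222a513` and `gib_to_esxi_mb 50` prints `51200`.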

This promises interesting things...

References:

Ceph NVMe-oF gateway on GitHub: https://github.com/ceph/ceph-nvmeof

IBM Ceph docs, NVMe-oF gateway technology preview: https://www.ibm.com/docs/.../devices-ceph-nvme-gateway-technology-preview

Red Hat docs, Configuring NVMe over fabrics using NVMe/TCP: https://access.redhat.com/documentation/.../configuring-nvme-over-fabrics...

Red Hat Ceph Storage: A use case study into ESXi host attach over NVMeTCP: https://community.ibm.com/.../red-hat-ceph-storage-esxi-nvmetcp

VMware, Configuring NVMe over TCP on ESXi: https://docs.vmware.com/...

ESXi esxcli nvme commands: https://vdc-repo.vmware.com/.../esxcli_nvme.html

NVM Express TCP Transport Specification: https://nvmexpress.org/.../NVM-Express-TCP-Transport-Specification-1.0d-2023.12.27-Ratified.pdf