You have probably already had to migrate all objects from one pool to another, especially to change parameters that cannot be modified on an existing pool. For example, to migrate from a replicated pool to an EC pool, to change the EC profile, or to reduce the number of PGs. There are different methods, depending on the contents of the pool (RBD, objects), its size, and so on.

The simple way

The simplest and safest method is to copy all objects with the "rados cppool" command. However, the pool must only be accessed read-only during the copy.

For example, to migrate to an EC pool:

pool=testpool

ceph osd pool create $pool.new 4096 4096 erasure default

rados cppool $pool $pool.new

ceph osd pool rename $pool $pool.old

ceph osd pool rename $pool.new $pool

But it does not work in all cases. For example, with EC pools you may get: "error copying pool testpool => newpool: (95) Operation not supported".

Using Cache Tier

This must be used with caution: test it before using it on a production cluster. It worked for my needs, but I cannot say that it works in all cases.

I find this method interesting because it allows transparent operation, reduces downtime and avoids duplicating all the data. The principle is simple: use the cache tier, but in reverse order.

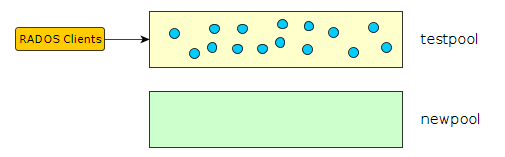

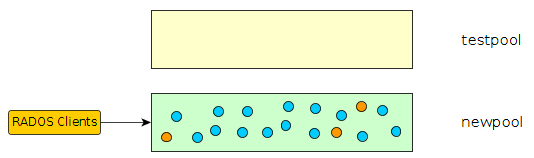

At the beginning, we have 2 pools: the current "testpool", and the new one "newpool".

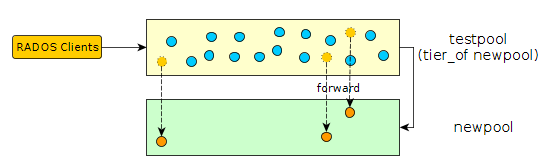

Set up the cache tier

Configure the existing pool as a cache pool:

ceph osd tier add newpool testpool --force-nonempty

ceph osd tier cache-mode testpool forward

In ceph osd dump you should see something like this:

--> pool 58 'testpool' replicated size 3 .... tier_of 80

Now, all new objects will be created on the new pool:

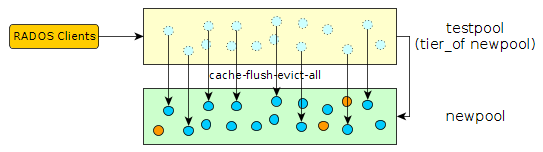
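To check the tier relationship from a script rather than by eye, one option is to pull a single field out of the `ceph osd dump` text. The sketch below is only an illustration: `pool_dump_field` is a hypothetical helper name, and it assumes the dump prints each pool on one line as `key value` pairs, as in the sample output shown in this post.

```shell
# Hypothetical helper (not part of Ceph): print the value that follows a
# given key on the `ceph osd dump` line of a given pool.
# Usage: ceph osd dump | pool_dump_field testpool tier_of
pool_dump_field() {
  awk -v name="'$1'" -v key="$2" '{
    # Only consider lines that mention the quoted pool name
    hit = 0
    for (i = 1; i <= NF; i++) if ($i == name) hit = 1
    if (!hit) next
    # Print the field right after the requested key, then stop
    for (i = 1; i < NF; i++) if ($i == key) { print $(i + 1); exit }
  }'
}
```

With the sample dump line for testpool, asking for `tier_of` would print `80`, the id of newpool in this example. Nothing is printed if the pool or the key is not found.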

Now we can force all objects to be moved to the new pool:

rados -p testpool cache-flush-evict-all

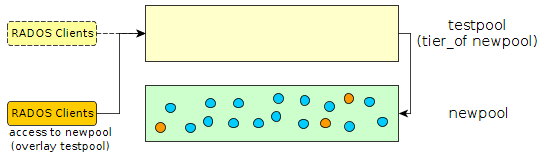
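Before going further, it can be useful to check how many objects are still left in the old pool. This is a minimal sketch, assuming that `rados -p testpool ls` prints one object name per line; `count_objects` is a hypothetical helper name.

```shell
# Hypothetical helper: count the non-empty lines read on stdin.
# Usage: rados -p testpool ls | count_objects
count_objects() {
  awk 'NF { n++ } END { print n + 0 }'
}
```

A result of 0 suggests that everything has been flushed and evicted. Anything else points to objects that are still in use or that were written in the meantime.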

Switch all clients to the new pool

(You can also do this step earlier, for example just after the cache pool creation.) Until all the data has been flushed to the new pool, you need to set an overlay so that objects are still looked up on the old pool:

ceph osd tier set-overlay newpool testpool

In ceph osd dump you should see something like this:

--> pool 80 'newpool' replicated size 3 .... tiers 58 read_tier 58 write_tier 58

With the overlay, all operations will be forwarded to the old testpool.

Now you can switch all the clients to access objects on the new pool.

Finish

When all data has been migrated, you can remove the overlay and the old "cache" pool:

ceph osd tier remove-overlay newpool

ceph osd tier remove newpool testpool

In-use objects

During eviction you may see some errors:

....

rb.0.59189e.2ae8944a.000000000001

rb.0.59189e.2ae8944a.000000000023

rb.0.59189e.2ae8944a.000000000006

testrbd.rbd

failed to evict testrbd.rbd: (16) Device or resource busy

rb.0.59189e.2ae8944a.000000000000

rb.0.59189e.2ae8944a.000000000026

...

Listing the watchers on the object can help:

rados -p testpool listwatchers testrbd.rbd

watcher=10.20.6.39:0/3318181122 client.5520194 cookie=1
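When many objects are busy, picking the failures out of the eviction output by hand gets tedious. The following sketch extracts the object names from lines of the form "failed to evict NAME: (NN) message", as in the error shown above. `failed_evictions` is a hypothetical helper name, and the assumption that the errors may arrive on stderr (hence the `2>&1` in the usage comment) has not been checked against every Ceph version.

```shell
# Hypothetical helper: read eviction output on stdin and print only the
# names of the objects that could not be evicted.
# Usage (assumes error lines may be written to stderr):
#   rados -p testpool cache-flush-evict-all 2>&1 | failed_evictions |
#     while read -r obj; do rados -p testpool listwatchers "$obj"; done
failed_evictions() {
  sed -n 's/^failed to evict \(.*\): ([0-9]*) .*$/\1/p'
}
```

Each name printed can then be passed to `rados listwatchers` to find the client that still holds the object.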

Using Rados Export/Import

For this, you need a temporary local directory with enough free space to hold the pool contents.

rados export --create testpool tmp_dir

[exported] rb.0.4975.2ae8944a.000000002391

[exported] rb.0.4975.2ae8944a.000000004abc

[exported] rb.0.4975.2ae8944a.0000000018ce

...

rados import tmp_dir newpool

# Stop All IO

# And redo a sync of modified objects

rados export --workers 5 testpool tmp_dir

rados import --workers 5 tmp_dir newpool
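After the final sync, one way to gain some confidence is to compare the object listings of both pools. The sketch below only compares names, not object contents, and assumes the listings were saved to files beforehand (for example with `rados -p testpool ls > old.txt` and `rados -p newpool ls > new.txt`). `missing_objects` and the file names are hypothetical.

```shell
# Hypothetical helper: print the names present in the first listing but
# absent from the second one.
# $1, $2: files holding `rados -p <pool> ls` output (source, destination)
missing_objects() {
  a=$(mktemp) b=$(mktemp)
  sort "$1" > "$a"
  sort "$2" > "$b"
  # comm -23 keeps the lines that appear only in the first sorted file
  comm -23 "$a" "$b"
  rm -f "$a" "$b"
}
```

An empty result means every object name from the old pool was also found in the new one.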